Datasets

To access and create dataset configs, navigate to AI > Dataset.

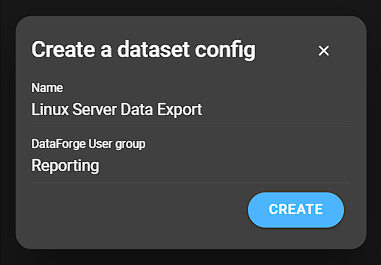

Create dataset config

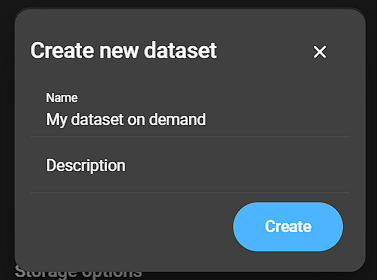

To create a dataset config, click the blue plus button. This opens a form:

- Name: Provide a name for the dataset config.

- DataForge User group: Select a DataForge user group. As with the reporting feature, a user group is required so that the service user can provide access to data on the Zabbix server.

Click Create to continue.

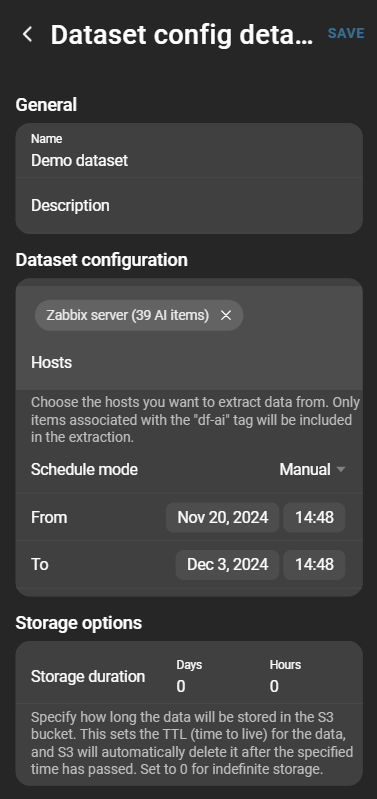

Configure dataset config

To further configure your dataset config, click on it. This opens the dataset config details:

General

Here you can change the name of your dataset or add a description.

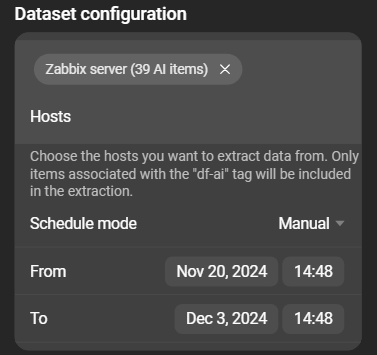

Dataset configuration

This configuration is split into two major categories:

- Hosts: The hosts from which the data is retrieved. Each host has an AI item count in parentheses next to the host name.

- Schedule: The schedule (interval) that determines the time span from which data for the dataset is processed and generated.

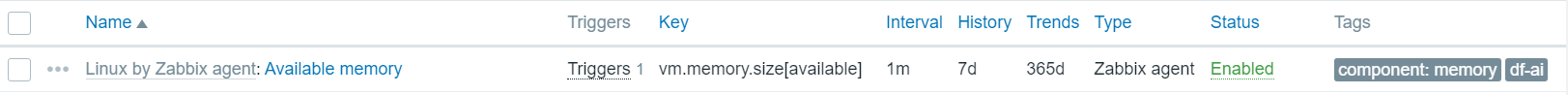

Hosts

When selecting one or more hosts, DataForge extracts the item histories from the host on your Zabbix server.

DataForge will only extract item histories from items that have the tag: df-ai. You must set this item tag manually for each item that is to be included in the dataset.
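If many items need the tag, setting it by hand can become tedious. As a rough sketch, the tag could also be set through the Zabbix JSON-RPC API method `item.update`. The helper name `build_tag_request`, the example item ID and the empty tag value are assumptions made for illustration; DataForge only requires that the item carries the `df-ai` tag. Because `item.update` is expected to overwrite the item's tag list, the sketch passes the existing tags along. Authentication and the HTTP call itself are left out, since they depend on your Zabbix version.

```python
import json

AI_TAG = "df-ai"  # tag that marks an item for inclusion in a dataset


def build_tag_request(item_id, existing_tags=(), request_id=1):
    """Build a JSON-RPC body for Zabbix `item.update` that adds the df-ai tag.

    existing_tags is the item's current tag list, e.g. as returned by
    `item.get` with `selectTags`. It is kept so no tag is lost.
    """
    tags = [dict(t) for t in existing_tags]
    if not any(t.get("tag") == AI_TAG for t in tags):
        tags.append({"tag": AI_TAG, "value": ""})  # empty value is an assumption
    return {
        "jsonrpc": "2.0",
        "method": "item.update",
        "params": {"itemid": str(item_id), "tags": tags},
        "id": request_id,
    }


if __name__ == "__main__":
    # Hypothetical item ID and pre-existing tag, for illustration only.
    body = build_tag_request("10084", [{"tag": "component", "value": "cpu"}])
    print(json.dumps(body, indent=2))
```

The resulting body would be sent as a POST request to the `api_jsonrpc.php` endpoint of your Zabbix frontend.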

Schedule

There are two options to set up a schedule:

- Preset: Datasets are generated at the selected interval and added to the list. Each dataset takes into account the data recorded during its interval.

- Manual: Set the start and end date and time manually. Only one dataset is created and only data from that time period is taken into account.
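The difference between the two options can be pictured as a choice of time window. The following sketch is only an illustration: it assumes that a preset run covers the interval that ends at the moment the dataset is generated, which is not confirmed here, and the function names `preset_window` and `manual_window` are hypothetical.

```python
from datetime import datetime, timedelta


def preset_window(run_time, interval):
    """Window of one preset run (assumed: the interval ending at run_time)."""
    return run_time - interval, run_time


def manual_window(start, end):
    """Window of a manual schedule: exactly the configured start and end."""
    if end <= start:
        raise ValueError("end must be after start")
    return start, end


if __name__ == "__main__":
    run = datetime(2024, 1, 8, 0, 0)
    # A weekly preset produces one window per run ...
    print(preset_window(run, timedelta(days=7)))
    # ... while a manual schedule produces a single, fixed window.
    print(manual_window(datetime(2024, 1, 1, 8, 0), datetime(2024, 1, 3, 18, 0)))
```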

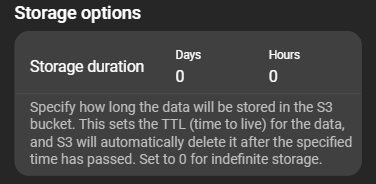

Storage options

The storage options can be used to organize your datasets. You can specify, in days and/or hours, how long an individual dataset is kept. A dataset that is older than the specified time is deleted.

The default setting Days: 0, Hours: 0 allows you to keep your datasets indefinitely.
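The retention rule can be sketched as follows. This is a minimal illustration of the behaviour described above, not DataForge's actual implementation; the function name `is_expired` and its parameters are assumptions.

```python
from datetime import datetime, timedelta


def is_expired(created_at, now, days=0, hours=0):
    """Return True if a dataset is older than the configured retention."""
    retention = timedelta(days=days, hours=hours)
    if retention == timedelta(0):
        return False  # Days: 0, Hours: 0 keeps datasets indefinitely
    return now - created_at > retention


if __name__ == "__main__":
    now = datetime(2024, 1, 10, 12, 0)
    print(is_expired(datetime(2024, 1, 1), now))          # default setting
    print(is_expired(datetime(2024, 1, 1), now, days=7))  # older than 7 days
```

With the default setting the first call never reports a dataset as expired, while the second reports a nine-day-old dataset as expired under a seven-day retention.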

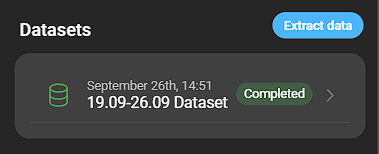

Datasets

This section lists all created datasets and lets you create a dataset on demand.

To create a dataset on demand, click Extract data. This opens a modal in which you can give the dataset a name and description. To finish the process, click Create.

Each dataset can be inspected further by clicking on it. This opens the dataset details.

Dataset details

To inspect a dataset, navigate to AI > Dataset. From there, select the dataset config that contains the dataset you want to inspect. The created datasets are listed at the bottom of the page. Click on the dataset you want to inspect.

Still extracting

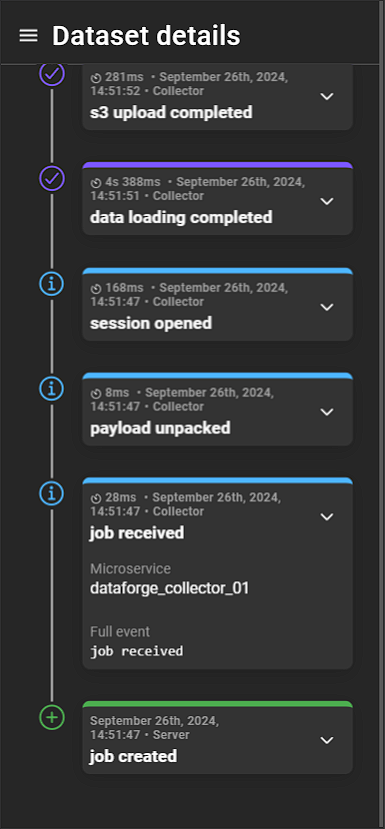

While the data is still being extracted, you can follow the progress in real time in the job log.

Job log

The job log shows each individual job that is carried out to create the dataset. Each of these steps can be viewed in more detail by expanding the card.

- Processing time: How long the step took to complete.

- Date and Time: Indicates when each step was processed.

- Type: Specifies the type of event.

- Event: The name of the event.

- Microservice: The name of the microservice responsible for the event.

- Full event: Shows the full event stack trace.

Finished extracting

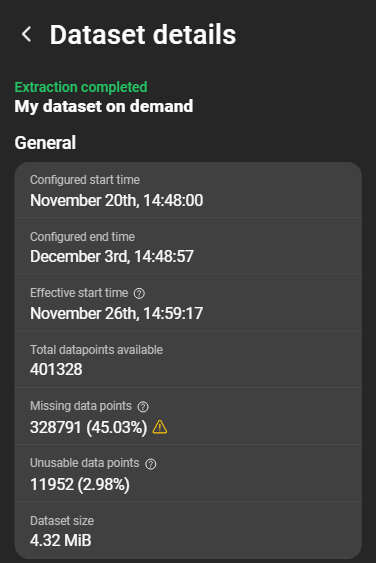

Once the data extraction is complete, two additional sections are displayed.

General

This section contains basic information about the dataset:

- Configured start time: The configured time at which the data extraction should start.

- Configured end time: The configured time at which the data extraction should end.

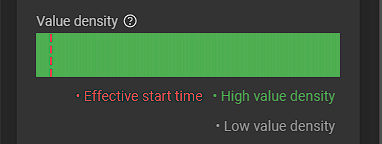

- Effective start time: The time from which data is usable for training and testing. Due to a technical limitation, each item must have at least one value before the dataset becomes usable. Therefore, values of one series that exist before the first value of another series cannot be used.

- Total datapoints available: Number of datapoints we received from Zabbix.

- Missing datapoints: The total number of datapoints that were expected to exist but are missing in the time series.

- Unusable datapoints: The number of datapoints collected before every item had at least one value. Items that already had data kept receiving new values during that time; those values cannot be used and are discarded.

- Dataset size: The dataset size.
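The relationship between the effective start time and the unusable datapoints can be sketched like this. It is a simplified illustration under the assumption that each item is given as a non-empty list of timestamps; the names `effective_start` and `unusable_count` are hypothetical and do not refer to DataForge code.

```python
def effective_start(series):
    """Latest first timestamp across all items.

    From this point on, every item has at least one value.
    series maps an item name to its list of timestamps (epoch seconds).
    """
    return max(min(points) for points in series.values())


def unusable_count(series):
    """Count datapoints recorded before the effective start time."""
    start = effective_start(series)
    return sum(1 for points in series.values() for ts in points if ts < start)


if __name__ == "__main__":
    series = {
        "cpu": [0, 60, 120, 180],  # starts collecting first
        "mem": [120, 180],         # first value arrives two intervals later
    }
    print(effective_start(series))  # 120
    print(unusable_count(series))   # 2
```

In this example the two earliest `cpu` values are discarded, because `mem` has no value yet at that time.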

Dataset items

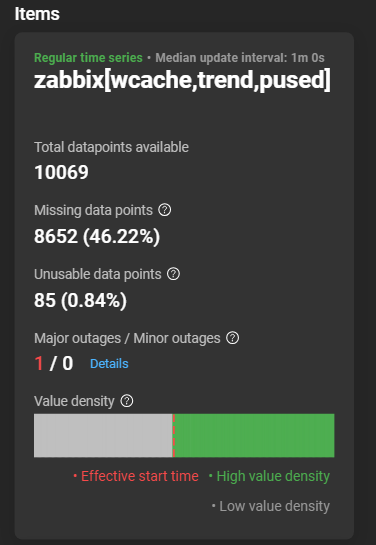

Detailed information about each extracted item:

Each card starts with the time series state and the item name.

An item can have one of two time series states: Regular or Irregular. A regular time series has a regular update interval. If data is missing at an expected point, this is shown as a missing data point. For an irregular time series there is no expected interval, so missing data points cannot be determined.

- Total datapoints available: Number of datapoints we received from Zabbix.

- Missing datapoints: Only available for regular time series. Displays the total number of datapoints that were expected to exist but are missing in the time series.

- Unusable datapoints: The number of datapoints collected before every item had at least one value. Items that already had data kept receiving new values during that time; those values cannot be used and are discarded.

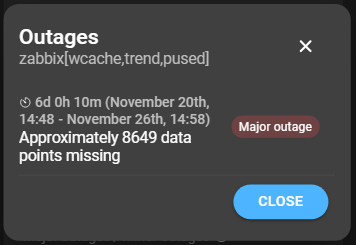

- Major/Minor outages: The number of major and minor outages. Outages are identified when expected data points are missing in a regular time series. If the missing data points exceed 5% of the total expected, the outage is considered a major outage. If outages were found, a Details button offers more information.

- Value density: The value density measures the concentration of data points in your time series, with higher density indicating more data points. It also indicates the effective start time, the moment each item had at least one value.
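How missing datapoints and outages could be derived for a regular time series is sketched below. This is one possible reading, not DataForge's actual algorithm: it assumes timestamps that are sorted and exactly aligned to the update interval, treats each gap as one outage, and compares the missing points of each gap against 5% of all expected points. The function name `find_outages` is hypothetical.

```python
def find_outages(timestamps, interval, threshold=0.05):
    """Return (expected, missing, outages) for a regular time series.

    timestamps: sorted epoch seconds, aligned to the update interval.
    interval:   expected update interval in seconds.
    outages:    list of (first_missing_timestamp, missing_points, kind).
    """
    expected = (timestamps[-1] - timestamps[0]) // interval + 1
    outages = []
    for prev, cur in zip(timestamps, timestamps[1:]):
        gap = (cur - prev) // interval - 1  # points missing between prev and cur
        if gap > 0:
            kind = "major" if gap > threshold * expected else "minor"
            outages.append((prev + interval, gap, kind))
    missing = sum(gap for _, gap, _ in outages)
    return expected, missing, outages


if __name__ == "__main__":
    # 101 expected points at 60 s; one point and a run of nine points are missing.
    ts = [t for t in range(0, 6001, 60) if t != 120 and not 600 <= t <= 1080]
    expected, missing, outages = find_outages(ts, 60)
    print(expected, missing)  # 101 10
    print(outages)            # [(120, 1, 'minor'), (600, 9, 'major')]
```

Real item histories rarely arrive at exact multiples of the interval, so a production version would need some tolerance when matching timestamps to expected points.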